Before and after tsunami, north of Sendai

While it remains highly likely that the dire warnings of “experts” such as Michio Kaku and Nancy Grace won’t come true, the other expert community (those who are knowledgeable) is not breathing a sigh of relief. The situation at the Daiichi reactors is more stable than it was a few days ago; however, the International Atomic Energy Agency says, “The situation at the Fukushima Daiichi plant is still very serious.” You can follow current news on the IAEA Facebook page or web site. The American Nuclear Society publishes a twice-daily summary, and Atomic Power Review provides clear, frequent updates. As I write this post, new information keeps popping up, often followed by “oops”; rapid information is not always accurate information.

At this time, many feel the most important energy issue in Japan is insufficient gasoline, heating fuel, and electricity.

Before and after the tsunami

The situation at Daiichi will be considered serious until all 6 units reach cold shutdown. The World Nuclear Association, in discussing the Daini reactors, describes cold shutdown as the state in which “coolant water is at less than 100ºC [212°F], with full operation of cooling systems.” According to the IAEA, units 5 and 6 are in cold shutdown. Units 4 to 6 were down for maintenance when the earthquake occurred, but their fuel rods still need to stay covered by water for some time, because the fission products (the smaller atoms left when uranium splits) continue to decay and generate heat. Sources I have read predict that reaching cold shutdown will take anywhere from days to months.
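Why must fuel stay covered long after a reactor shuts down? One rough way to see it is the Way-Wigner approximation, a textbook rule of thumb (not something drawn from the sources above) for decay heat as a fraction of a reactor's former thermal power. This sketch assumes one year of steady operation before shutdown; the helper names are illustrative, and the approximation is good only to within tens of percent.

```python
# Rough decay-heat estimate using the Way-Wigner approximation:
#   P(t)/P0 = 0.0622 * (t**-0.2 - (t + T)**-0.2)
# where t is seconds since shutdown and T is seconds of prior operation.
# A textbook rule of thumb, not a substitute for detailed decay-heat tables.

SECONDS_PER_DAY = 86_400
SECONDS_PER_YEAR = 365 * SECONDS_PER_DAY


def decay_heat_fraction(t_seconds: float, operating_seconds: float = SECONDS_PER_YEAR) -> float:
    """Decay heat as a fraction of pre-shutdown thermal power (assumes steady prior operation)."""
    if t_seconds <= 0:
        raise ValueError("time since shutdown must be positive")
    return 0.0622 * (t_seconds ** -0.2 - (t_seconds + operating_seconds) ** -0.2)


if __name__ == "__main__":
    checkpoints = [
        ("1 second", 1),
        ("1 hour", 3600),
        ("1 day", SECONDS_PER_DAY),
        ("1 week", 7 * SECONDS_PER_DAY),
        ("1 month", 30 * SECONDS_PER_DAY),
    ]
    for label, t in checkpoints:
        print(f"{label:>9}: {100 * decay_heat_fraction(t):.2f}% of full power")
```

Under these assumptions, decay heat falls from about 6% of full power in the first second to roughly 0.4% after a day and a little over 0.1% after a month. For a reactor that produced a few thousand megawatts of heat, even that last figure means megawatts that must be carried away continuously.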

Biggest concern Monday PM, PDT

The largest challenge today is the significant radioactivity in the water, especially around Reactor 2. Nineteen workers have now received doses greater than 100 millisieverts (mSv), although all remain below the 250 mSv dose allowed for emergencies. See the end of this post for a discussion of units, regulatory limits, and safety.

Radioactivity measurements fluctuate rapidly, and differ widely by location.

Boiling Water Reactor

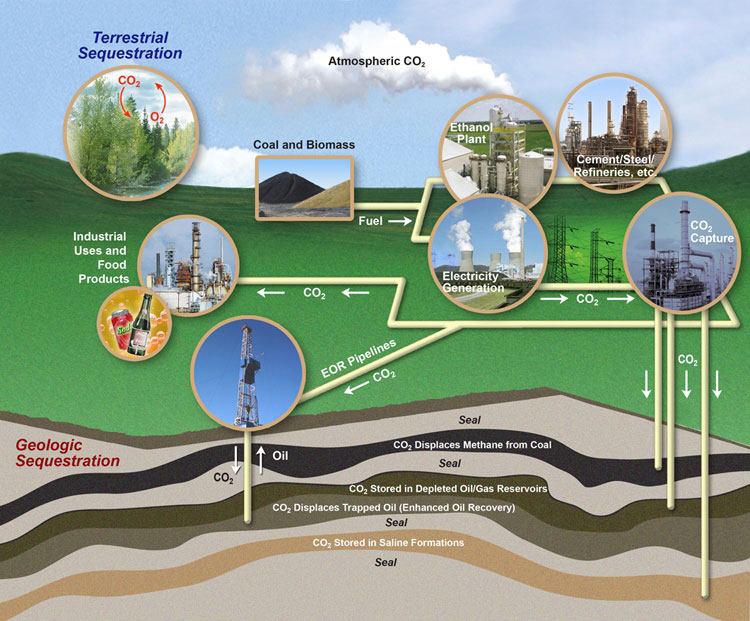

“Where is the radioactivity coming from?” is the question of the hour. There appears to be no major breach in the reactor vessel walls, since pressure remains high. Based on the isotopic composition of the contamination (which atoms are actually present), the current best guess is leakage from the pipes of the primary reactor loop, which runs between the reactor and the turbine. The high levels of radioactivity in the turbine buildings (highest for reactor 2) may be coming from damaged fuel rods. Meanwhile, it is clear that the high radioactivity is slowing diagnosis and repair.

Scary numbers indicating the possible spread of radioactivity to the public (Japan Soil Measurements Surprisingly High) are hard for those of us in the public to sort through. As a non-expert, I cannot judge which of several possibilities (e.g., that this much radioactivity could have spread, or that the data are incorrect) is more likely. (More on the implications of one set of soil measurements, although no one, so far as I know, has confirmed the numbers.) I would be uncomfortable either choosing or excluding particular options at this point.

Safety culture/journalism culture

The current analysis, which may differ from the best understanding a few months from now, reports several failures by the Japanese to respond to warnings about tsunamis and other hazards. The international community plans to discuss a variety of safety concerns. For example, the Japanese use a deterministic model, going back through hundreds of years of data to determine the worst-case conditions a reactor might see, while Americans use probabilistic models, which result in more stringent designs. The world has raised numerous concerns while remaining sympathetic to the reality that Japan has other issues on its plate right now, and to the many Tepco employees carrying the burden of friends and family dead and homeless; but contradictory and insufficient information has interfered with communication. Perhaps Tepco should have immediately appointed a spokesperson to explain clearly what was and was not known about the state of the Daiichi plants.

Criticism of media coverage is beginning as well. Fiona Fox of the BBC describes journalists who assume that those who know what they are talking about operate from bias, and who therefore rely on the other kind of experts, those who don’t know what they are talking about. A substantial share of the media was culpable, and we can hope for meaningful media investigations, and perhaps a reconsideration of media culture.

The US has come in for criticism as well: setting an 80 km (50 mile) evacuation zone for Americans when Japan had a 20 km zone implied that the Japanese understanding of the situation was inadequate, or inadequately communicated. The one specific concern cited by Nuclear Regulatory Commission Chair Gregory Jaczko, a problem with the water level in the unit 4 spent fuel pool, turned out to be incorrect, and there is worry that the evacuation decision may have been made by people insufficiently “sure of their facts”.

Putting the Danger into Perspective

The Daiichi reactors contain enormous amounts of radioactivity, more than was in the one Chernobyl reactor. Few of us have more than a basic understanding of how that radioactivity will enter the environment, or be prevented from doing so. For many of us, the problems at Daiichi fit into our “what ifs” about nuclear power: one of many scenarios just waiting to happen. (Dr. Robert DuPont, who specializes in phobias and anxieties, became interested in nuclear power after noticing that more than 10 years of media coverage had focused on “what ifs” rather than on actual reports of harm.) Still others believe that the media would not make statements in gajillion-point font, ALL CAPS, with plenty of !!! without reason. (After seeing media coverage of the Scott Peterson arrest, I no longer share this belief.) The focus on the nuclear dangers at Daiichi has crowded a number of arguably equally or more important topics off the front page.

So far, almost 11,000 people are known dead from the earthquakes and tsunamis, and more than 17,000 are missing. About 200,000 remain in shelters and refugee centers.

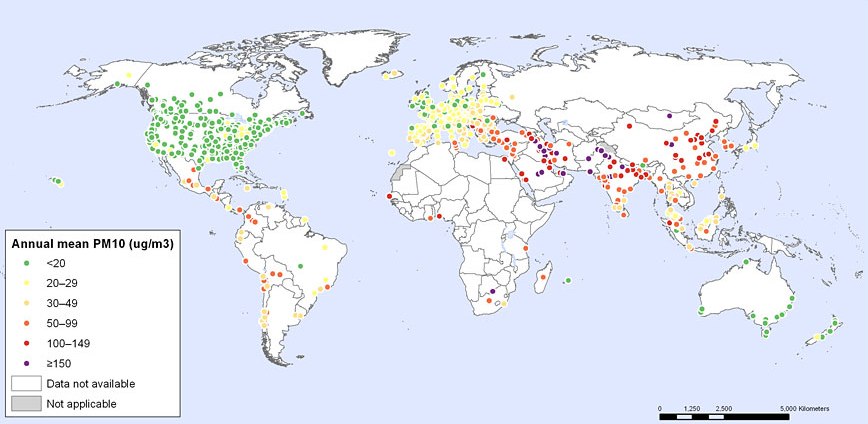

As I write this, it has been 2.5 weeks since the earthquake. The World Health Organization estimates that 150,000 people die worldwide from air pollution in an average 2.5-week period, and that 5,000 (pdf) died from climate change in 2000 (and perhaps more this year). To be fair, it is unreasonable to hope that the media will ever devote as much coverage in a year to these kinds of problems as they have devoted to Daiichi over the past 2.5 weeks.

People who could be experts—it’s hard to sort out

As always, there are a number of sources in the middle of a continuum that runs from Kaku and Grace at one end to the IAEA at the other. Two have been brought to my attention. New Scientist reports “Fukushima radioactive fallout nears Chernobyl levels,” quoting Gerhard Wotawa of Austria’s Central Institute for Meteorology and Geodynamics in Vienna. The Union of Concerned Scientists began a press conference by claiming that they neither oppose nor support nuclear power, a claim unlikely to be accepted by either supporters or detractors. They then cited the same source.

At this time, estimates of the total radioactivity released are likely to suffer from insufficient and selectively chosen data, and sometimes from inaccurate data among the flood of information. The UCS warning that the total release could be several times worse than Chernobyl is especially dubious. Since UCS has a history of sensationalizing the ordinary, I prefer to skip the whole process of double-checking their data and ideas, and to begin with sources less prone to error.

Why the bet is still against another Chernobyl despite some numbers and comments online

The general belief remains that neither the exposure nor the health consequences will come close to those produced by Chernobyl (see IAEA’s The Chernobyl Report (pdf)). The combined capacity of the Daiichi reactors is greater than that of the Chernobyl reactor (1,000 MW). First, however, Chernobyl was operating, and creating fission products, when the accident occurred. Of the six Daiichi reactors, half were already shut down for maintenance when the earthquake occurred, and the other three shut down immediately. Some fission continues, but at a far lower rate (boron has been added to the water to absorb neutrons and keep the chain reaction from restarting). About 3/4 of the radioactivity released at Chernobyl came from xenon-133, which has a 5.2-day half-life. More than 3 half-lives have passed since the earthquake, so the store of xenon is down to less than 1/8 of its original level (the daughter, cesium-133, is stable). Iodine-131 was the second most important isotope, responsible for more than 90% of the remaining radioactivity. More than 2 of its 8-day half-lives have passed, so iodine levels are down below 1/4 of the original store (the daughter, xenon-131, is stable). The next 4 on the list (cesium-137, cesium-134, krypton-85, and strontium-90) were responsible for about 2% of the radioactive release at Chernobyl; their half-lives are too long for much decay to have occurred since the earthquake. (Chernobyl release figures are from table 15.2 in David Bodansky, Nuclear Energy, 2nd Edition.)
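The half-life arithmetic in this paragraph can be checked with the standard decay law, fraction remaining = (1/2)^(elapsed time / half-life). This sketch assumes 17.5 days (2.5 weeks) have elapsed; the xenon-133 and iodine-131 half-lives are the ones quoted above, while the roughly 30-year cesium-137 half-life is a standard reference value added here for comparison.

```python
# Fraction of an isotope's original inventory left after a given time,
# using N(t)/N0 = 0.5 ** (t / half_life).

HALF_LIVES_DAYS = {
    "xenon-133": 5.2,          # as quoted in the text
    "iodine-131": 8.0,         # as quoted in the text
    "cesium-137": 30.1 * 365,  # about 30 years (standard reference value)
}


def fraction_remaining(elapsed_days: float, half_life_days: float) -> float:
    """Fraction of the starting amount that has not yet decayed."""
    return 0.5 ** (elapsed_days / half_life_days)


if __name__ == "__main__":
    elapsed = 17.5  # about 2.5 weeks since the earthquake (assumed)
    for isotope, half_life in HALF_LIVES_DAYS.items():
        n_half_lives = elapsed / half_life
        left = fraction_remaining(elapsed, half_life)
        print(f"{isotope:>10}: {n_half_lives:5.2f} half-lives, {left:.3f} of original left")
```

Xenon-133 comes out near 10% of its original inventory and iodine-131 near 22%, consistent with "less than 1/8" and "below 1/4"; cesium-137 has lost only about a tenth of a percent.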

Second, the Daiichi reactors have a containment system. If sufficiently stressed, it may fail, but a sizable portion of the radioactivity will still be contained. Third, burning graphite facilitated the spread of radioactivity at Chernobyl, and no such mechanism exists in Western reactors. Fourth, the effects of Chernobyl were made worse by uneven distribution of potassium iodide and by inadequate restrictions on milk and other foods in the affected areas.

Chernobyl is the worse-than-worst case: 50 to 60 dead so far, primarily immediate deaths among the firemen (31 dead within months) and juvenile thyroid cancer (15). There have been thousands of cases of thyroid cancer to date, and there may be 4,000 more deaths from the initial exposures to radioactivity over the next few decades. Pretty horrible. At this point, the expectation is that Daiichi will not come close to being that dangerous.

Economically, the cost is likely to be high. Three reactors are ruined and will need to be replaced at a time when Japan has a shortage of electricity. If there is substantial leakage of radioactivity, some land may need remediation. Overly rigorous regulatory standards for safe levels of radioactivity may result in throwing away food that poses little danger.

Not as bad as Chernobyl, but Daiichi could still produce deaths and enormous costs, and the situation is far from resolved.

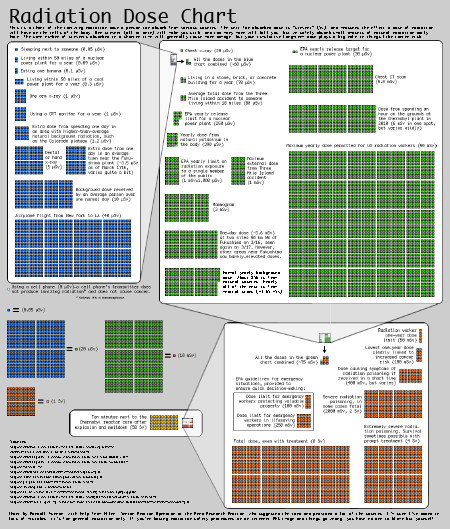

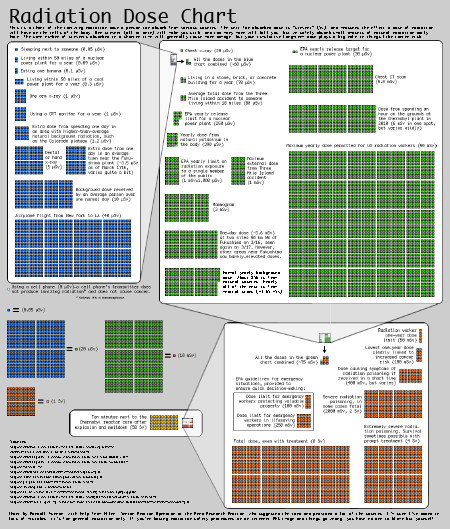

Explaining units, an introduction

The most frequently used unit for dose is the sievert, Sv. The US, as usual, has a completely different set of units, but fortunately the conversion is easy: 1 Sv = 100 rem. Milli and micro indicate 1/1,000 and 1/1,000,000. Unfortunately, the media use other units as well, such as the curie, the becquerel (Bq, decays per second), and, from Geiger counters, counts per minute. For a more complete look at units, see Measuring Radiation. The sievert does not measure decay rate; it is the absorbed dose (energy deposited per kilogram of tissue, measured in grays) multiplied by a weighting factor between 1 (X-rays, gamma rays, and electrons, i.e., beta particles) and 20 (alpha particles and fission fragments) to reflect the degree of actual damage. There are about 9,000 hours in a year (8,760, to be exact), so multiply a dose per hour by 9,000 to get a sense of the annual magnitude. Readers will welcome any explanation that makes sense of Bq/square meter and “times normal,” since many of us don’t know what normal is.
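The conversions in this paragraph are simple enough to script. This minimal sketch uses 1 Sv = 100 rem from the text, plus the standard definition 1 curie = 3.7 × 10^10 Bq (not stated above); for annualizing it uses the exact 8,760 hours in a non-leap year rather than the rounder 9,000. The function names are illustrative only.

```python
# Radiation unit conversions (helper names are illustrative).

REM_PER_SIEVERT = 100       # 1 Sv = 100 rem
BQ_PER_CURIE = 3.7e10       # 1 Ci = 3.7e10 decays per second
HOURS_PER_YEAR = 24 * 365   # 8,760; "about 9,000" in the text


def sieverts_to_rem(sv: float) -> float:
    return sv * REM_PER_SIEVERT


def equivalent_dose_sv(absorbed_gray: float, weighting_factor: float) -> float:
    """Absorbed dose (Gy) times radiation weighting factor: 1 for gamma/beta, 20 for alpha."""
    return absorbed_gray * weighting_factor


def annual_msv_from_hourly_usv(usv_per_hour: float) -> float:
    """Dose from a full year at a constant rate, in mSv (1 mSv = 1,000 uSv)."""
    return usv_per_hour * HOURS_PER_YEAR / 1000


def curies_to_becquerels(ci: float) -> float:
    return ci * BQ_PER_CURIE


if __name__ == "__main__":
    print(sieverts_to_rem(0.25))            # 250 mSv emergency limit, in rem
    print(equivalent_dose_sv(0.001, 20))    # 1 mGy of alpha radiation, in Sv
    print(annual_msv_from_hourly_usv(1.0))  # 1 uSv/h sustained for a year, in mSv
```

For example, a sustained 1 µSv/h works out to roughly 8.8 mSv over a year, about three times the typical US background of 3 mSv/year.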

Radiation chart


How much is safe? Officially, there is no safe level. Typical American background exposure is 3 mSv/year, and Americans receive another 3 mSv/year from medical procedures (possibly an underestimate; Science subscription needed). Yet we do not receive travel advisories when we visit Denver (>2 times the US average), let alone parts of India, Norway, and Brazil, where background levels might be >35 times the US average, or Ramsar, Iran, a resort where background radioactivity is about 100 times the US average.
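To put the multiples in this paragraph into the same units as the worker doses discussed earlier, this sketch converts "times the US average" into approximate annual doses, assuming the 3 mSv/year background and 3 mSv/year medical figures given above. The multiples are the rough ones quoted in the text (Denver's ">2" and the ">35" are treated as 2 and 35), so the results are orders of magnitude, not measurements; the function name is hypothetical.

```python
# Approximate annual dose for a location whose natural background is a
# multiple of the US average, using the figures quoted in the text.

US_BACKGROUND_MSV_PER_YEAR = 3.0  # typical US natural background
US_MEDICAL_MSV_PER_YEAR = 3.0     # typical US medical exposure


def annual_dose_msv(background_multiple: float, include_medical: bool = True) -> float:
    """Background scaled from the US average, plus (optionally) medical dose, in mSv/year."""
    dose = background_multiple * US_BACKGROUND_MSV_PER_YEAR
    if include_medical:
        dose += US_MEDICAL_MSV_PER_YEAR
    return dose


if __name__ == "__main__":
    # Rough lower-bound multiples from the text.
    places = {
        "US average": 1,
        "Denver": 2,
        "parts of India, Norway, Brazil": 35,
        "Ramsar, Iran": 100,
    }
    for place, multiple in places.items():
        background = annual_dose_msv(multiple, include_medical=False)
        print(f"{place:>30}: ~{background:.0f} mSv/year from background")
```

By these rough figures, a year of background radiation in Ramsar (about 300 mSv) exceeds the 250 mSv emergency limit for Daiichi workers, though it is spread over a year rather than received in days or weeks.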

Dose rate matters. For a large dose received in a short time, the powers that be (e.g., the National Academies' Biological Effects of Ionizing Radiation committee) set risk coefficients of 0.08 per sievert for workers and 0.1 per sievert for the general population, which includes children and older people. So for every sievert of collective dose (summed over everyone exposed) in a group from the general population, 0.1 death is expected. When the dose or dose rate is smaller, many groups recommend dividing this risk coefficient by 2. According to the Linear No Threshold model, 1 person receiving a 10 Sv exposure will die. One person out of 10, each receiving a 1 Sv exposure, will die. One person out of 100, each receiving a 0.1 Sv exposure, will die. Between 0.5 and 1 person out of 10 million, each receiving a 1 microSv exposure, will die.
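The Linear No Threshold arithmetic in this paragraph is a single multiplication: collective dose (number of people times dose per person, in person-sieverts) times the risk coefficient, optionally halved for low doses or dose rates. This sketch uses the coefficients quoted above; the function name and the `low_dose` flag are illustrative, not taken from the BEIR reports or any other source.

```python
# Expected excess deaths under the Linear No Threshold (LNT) model,
# using the risk coefficients quoted in the text (deaths per person-sievert).

RISK_PER_SV = {"workers": 0.08, "general": 0.1}


def expected_deaths(people: int, dose_sv_each: float,
                    population: str = "general", low_dose: bool = False) -> float:
    """Collective dose (person-Sv) times the LNT risk coefficient."""
    collective_dose = people * dose_sv_each
    coefficient = RISK_PER_SV[population]
    if low_dose:
        coefficient /= 2  # halving many groups apply at low dose or dose rate
    return collective_dose * coefficient


if __name__ == "__main__":
    # The text's examples: each case is a collective dose of 10 person-Sv.
    print(expected_deaths(1, 10))                            # one person, 10 Sv
    print(expected_deaths(10, 1))                            # 10 people, 1 Sv each
    print(expected_deaths(100, 0.1))                         # 100 people, 0.1 Sv each
    print(expected_deaths(10_000_000, 1e-6, low_dose=True))  # 10 million people, 1 microSv each
```

Apart from the optional halving, the result depends only on the collective dose: 10 million people receiving a microsievert each count the same as one person receiving 10 Sv.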

Regulatory standards can be set at very different safety levels. For radioactivity, the risk at the level regulated appears to be low.

From the Japanese prime minister’s office:

Japan’s provisional standard values for the radioactive levels of agricultural products including vegetables have been set based on the standard values established under the International Commission on Radiological Protection (ICRP). The provisional standard values are precautionary measures. Even if a person continues to intake the radioactive levels exceeding the Japanese provisional standard values for one year, it would not pose risks to the health.

Regulatory standards do not always keep risk this low: the US standard for arsenic in drinking water was 50 micrograms/liter, a level leading to bladder cancer in 1 out of every 1,000 people. (The standard is now 10 micrograms/liter, to lower the risk below 1 in 10,000. The problem is greater where ground water is used, and in the West.)

On March 16, two weeks ago, the Environmental Protection Agency (pdf) proposed rules that would not eliminate the effect of coal on human health but would help, by preventing “up to 17,000 premature deaths, 11,000 heart attacks, 120,000 asthma attacks, 12,200 hospital and emergency room visits, 4,500 cases of chronic bronchitis, and 5.1 million restricted activity days.”

It’s not over

One advantage the coal industry has is that many of its tragedies tend to be over fairly soon, and are often easier to explain.

We can only hope that the various challenges facing the Japanese—cold, homelessness, fear, and Daiichi—are over soon. Dealing with all the challenges, though, may require a long wait. Across Japan, and at Daiichi, it is likely to take a while to understand the full extent of the damage.

Note: I am a lay person. Corrections welcome!

Update: added decay chain daughters, a link to Atomic Power Review, and a comment from the Japanese prime minister’s office